
Project Overview: Brownfield Action 3.0

Partner(s): Peter Bower, Barnard College

Access: Private

Revised: August 2007

Released: September 2001

The Brownfield Action simulation is a central component of Professor Peter Bower's Introduction to Environmental Science course at Barnard College. In this simulation, students are presented with maps, documents, videos, and an extensive network of scientific data to investigate a site suspected of contamination. They assume the roles of environmental consulting firms contracting with a real estate developer to study the condition of the site and report on the feasibility of commercial construction.

First developed in 1999, Brownfield Action has been nationally recognized by the Association of American Colleges and Universities and was featured as a model curriculum at the Association's SENCER Institute (Science Education for New Civic Engagements and Responsibilities). Development of the simulation's most recent evolution is supported by a grant from the National Science Foundation, with the broader goal of disseminating the project to other colleges and universities.

Communication: Brownfield Action

CCNMTL, Professor Bower, and Dr. Cornelia Brunner, an independent evaluation consultant on this project from the Center for Children and Technology, worked in concert to research and develop a set of evaluation instruments that measure the effectiveness of BA as a teaching and learning tool in environmental science course curricula. Following a thorough analysis of its strategies and design, the evaluation was implemented at Barnard and two additional partnering institutions that have incorporated BA into their own unique courses.

Courses that underwent the BA evaluation include Environmental Site Assessment for civil engineering majors at Lafayette College, Professor Bower’s Introduction to Environmental Science at Barnard College for non-science majors, and an upper-level hydrology course taken primarily by environmental science majors at Connecticut College.

Research and Assessment

The pedagogy of the simulation emphasizes student-centered, inquiry-driven learning, team collaboration, and the synthesis of an interdisciplinary array of skills and knowledge required for mastery of environmental science concepts.

Evaluation of BA’s role in student learning sought to answer the following key questions:

- How successful is BA in promoting increased understanding of core concepts taught in the course?

- Does the student-centered, collaborative, and inquiry-driven pedagogy supported by BA affect students’ sense of responsibility for their own learning?

- What kind of learning is happening in the BA environment?

Our research and development work on evaluation instruments encompassed a range of approaches and tools designed to help answer these key questions. Concept mapping and a scheme for content analysis of student work were two research methods explored for analyzing BA’s role in supporting an increased understanding of course concepts. The pre- and post-course science survey required multiple revisions to narrow the question set to items addressing how BA affects students’ sense of responsibility for and ownership of their own learning. Our Critical Incident Report survey went through several design changes so that it would ask, as concretely as possible, which elements of the simulation triggered a learning moment for students.

After review of the various methods and results from the pilot evaluation, we found that the following suite of evaluation instruments provided the means for capturing a comprehensive, measurable assessment of the BA simulation in a variety of environmental science course contexts:

1. Content Analysis of student-produced reports based on their work with the simulation

To evaluate how well BA promotes increased understanding of the core concepts taught in the respective courses, we first explored use of a concept mapping exercise to capture and document students’ understanding of key concepts. However, for our research purposes, the coding process for assessing the quality of student maps in a uniform way proved challenging. After trying out the concept map instruments in the pilot evaluation, plans were adjusted to instead conduct content analyses of student final reports based on their work in the BA simulation.

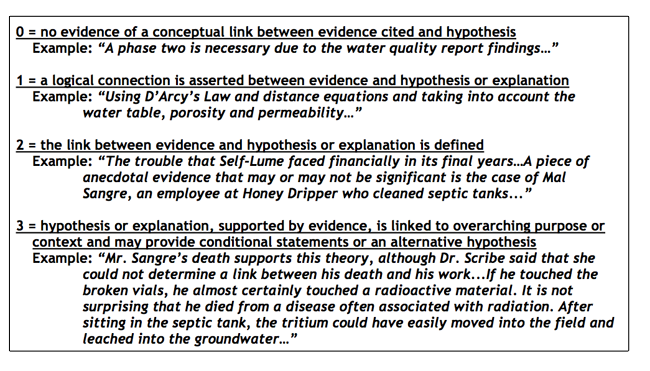

The portion of student reports we coded asked for a synthesis of both narrative-based and empirical evidence from the simulation. Specifically, students were asked to provide a scientific basis to support their hypothesis about an alleged contamination in the simulated town, including a description of the water table characteristics, the direction of groundwater flow, Darcy’s Law, and sediment analysis. The coding scheme consisted of four levels (see Figure 1).

Fig. 1: Four-level coding scheme used in content analysis of student-produced reports based on their work in BA.

Dr. Brunner then examined the outcome of our content analysis of the student reports and compared it with the students’ grades in the class and with the ways in which they discussed the use of the simulation in their learning in the Critical Incident Report surveys.

2. Pre-post science survey

The pre-post science survey was based on a much longer survey about student attitudes toward and beliefs about science, developed at Columbia University by Dr. David Krantz between 2004 and 2006.

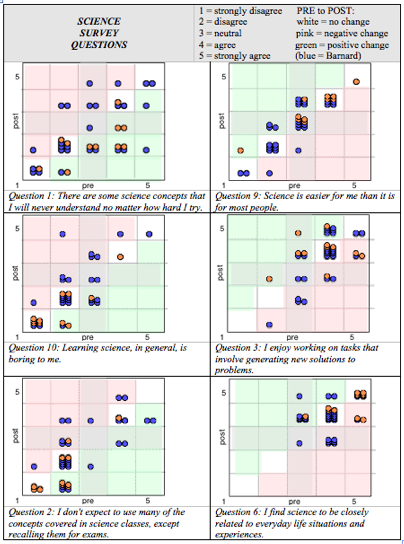

Our modified survey consisted of a dozen statements, half of which were about students’ personal relationship to science and the other half about the nature of science. There was a positive and a negative statement pertaining to each topic. Students were asked to rate their agreement with each statement on a five-point scale. We obtained pre- and post-class survey responses to evaluate how BA affects students’ sense of responsibility and ownership for their own learning (see Figure 2).

Fig. 2: Comparison of pre- and post-test results evaluating how, if at all, the simulation affects students’ sense of responsibility and ownership for their own learning.
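One way responses to a survey of this shape could be scored is sketched below. This is an illustrative assumption, not the procedure described in the evaluation report: it assumes ratings run from 1 (strongly disagree) to 5 (strongly agree) and that negatively worded statements are reverse-scored so that a higher score always reflects a more favorable view. The function names and the sample ratings are hypothetical.

```python
from statistics import mean

SCALE_MAX = 5  # assumed five-point scale: 1 = strongly disagree, 5 = strongly agree


def score_response(rating, negatively_worded):
    """Return the rating, reverse-scored if the statement is negatively worded."""
    if not 1 <= rating <= SCALE_MAX:
        raise ValueError(f"rating must be between 1 and {SCALE_MAX}, got {rating}")
    return SCALE_MAX + 1 - rating if negatively_worded else rating


def student_score(ratings, negative_items):
    """Mean scored rating across all statements for one student.

    negative_items holds the zero-based positions of negatively worded statements.
    """
    return mean(
        score_response(rating, index in negative_items)
        for index, rating in enumerate(ratings)
    )


def pre_post_shift(pre, post, negative_items):
    """Post-course score minus pre-course score for one student."""
    return student_score(post, negative_items) - student_score(pre, negative_items)


# Hypothetical ratings for a four-statement excerpt (not data from the study).
example_pre = [3, 3, 4, 2]
example_post = [4, 2, 4, 2]
example_negative_items = {1, 3}  # positions of the negatively worded statements

print(pre_post_shift(example_pre, example_post, example_negative_items))
```

A positive shift under this scheme would indicate more favorable responses after the course; averaging shifts across students gives a per-course summary comparable to a pre/post chart.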

3. Critical Incident Report survey

We asked students to describe a moment when they found the simulation useful by telling us what they were trying to accomplish or understand, how using the simulation helped, and what would have been different if they used more conventional instructional materials. We also asked what else they wished they could do in the simulation and what bothered them about not being able to do it in this version.

The responses fell into four categories (see Figure 3) and allowed us to examine the types of learning successfully occurring in the BA environment: the simulation a) is a visual aid, b) serves to illustrate real life or a complex concept, c) allows for student action, or d) scaffolds learning.

| Simulation Use | Lafayette (n=25) | Connecticut (n=9) | Barnard (n=28) |
|---|---|---|---|
| a) visualization aid | 24 | 0 | 20 |
| b) illustrates reality | 28 | 67 | 25 |
| b) illustrates concept | 4 | 0 | 20 |
| c) interact - technique | 0 | 0 | 10 |
| c) interact - skill | 28 | 67 | 25 |
| c) interact - fun | 8 | 0 | 5 |
| c) authentic experience | 8 | 11 | 5 |
| d) scaffold - easy | 0 | 11 | 5 |
| d) scaffold - clues | 4 | 0 | 0 |
| d) scaffold - info | 4 | 0 | 0 |

Fig. 3: Percent of students citing affordances of the simulation.

Results and Conclusions

In summary, the final evaluation report by Dr. Brunner considers how the simulation was used differently at Barnard, Connecticut, and Lafayette Colleges, given the differences in the student body, course context, and curricular goals of each course. Using the suite of evaluation instruments, Dr. Brunner was able to examine and identify elements of BA’s effectiveness as a learning tool across a range of environmental science courses.

Contact CCNMTL for more information about the final evaluation report, which details the R&D work on the instruments, the evaluation implementation, and an analysis of the results. Much of this text was borrowed from Dr. Brunner’s final report to the National Science Foundation.

For more information about the Brownfield Action simulation, go to: https://brownfieldaction.org.